How AI is Built: Part 1

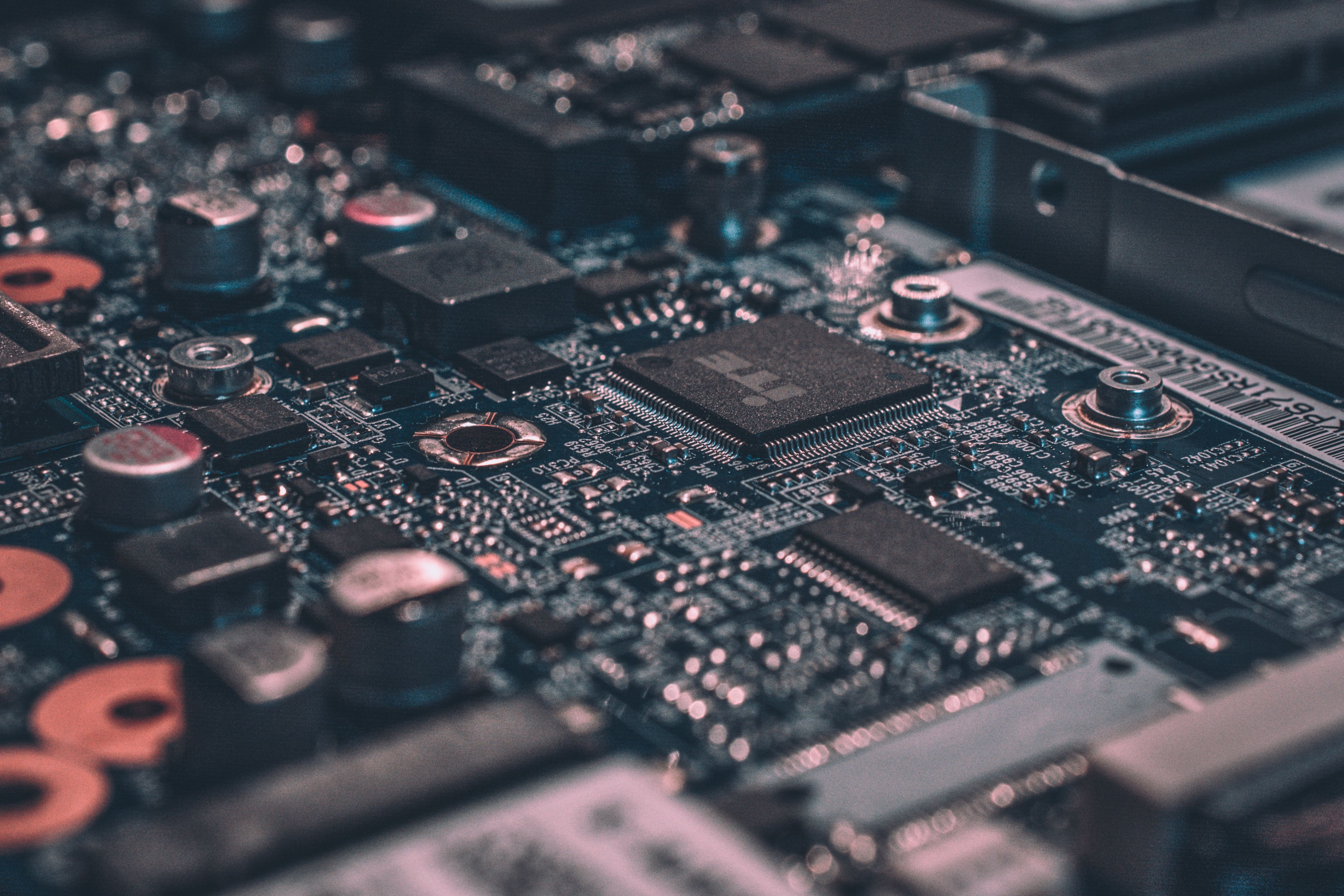

The Hardware That Powers Intelligence

We have talked about how AI is changing one industry after another and how corporations and institutions will assimilate it into their organizations, but we have never actually covered what it is built on.

So, let's start fresh! Welcome to How AI is Built: Part 1.

The Brains Behind the Brains

At the heart of AI are processors designed for speed and efficiency. While your everyday desktop, laptop, or phone runs mainly on a CPU (Central Processing Unit), training AI models typically relies on chips built for massively parallel arithmetic: GPUs (Graphics Processing Units) and TPUs (Tensor Processing Units).

GPUs (Graphics Processing Units)

Originally created for rendering video game graphics, GPUs are masters of parallel processing. Instead of working through a handful of tasks at a time, they can run thousands of operations simultaneously. This makes them well suited to the kind of repetitive calculations AI needs, such as the same multiply-and-add steps applied across huge grids of numbers.
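To make "parallel" a little more concrete, here is a minimal Python sketch of the idea. It is only an analogy: the toy numbers and the function names are made up for illustration, and CPU threads stand in for GPU cores (Python threads will not actually speed this up). The point is that each output value can be computed without waiting for any of the others, which is exactly the kind of work a GPU can spread across thousands of cores.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical toy data: a 3x4 grid of weights and a 4-element input.
WEIGHTS = [
    [1.0, 2.0, 3.0, 4.0],
    [0.5, 0.5, 0.5, 0.5],
    [2.0, 0.0, -1.0, 1.0],
]
INPUTS = [1.0, 2.0, 3.0, 4.0]


def dot(row, vector):
    """Multiply matching elements and add them up (one output value)."""
    return sum(w * x for w, x in zip(row, vector))


def matvec_sequential(weights, vector):
    """Compute the outputs one after another, CPU-style."""
    return [dot(row, vector) for row in weights]


def matvec_parallel(weights, vector, workers=3):
    """Hand each row to a separate worker; no row depends on another."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in the same order as the input rows.
        return list(pool.map(lambda row: dot(row, vector), weights))


if __name__ == "__main__":
    print(matvec_sequential(WEIGHTS, INPUTS))  # [30.0, 5.0, 3.0]
    print(matvec_parallel(WEIGHTS, INPUTS))    # [30.0, 5.0, 3.0]
```

Both versions give the same answer. The difference is that the second one never needs row one to finish before starting row two, so with enough workers all rows could run at once.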

TPUs (Tensor Processing Units)

Designed by Google, TPUs are custom-built chips created specifically to accelerate AI workloads. They make tasks like training large language models or running image recognition systems much faster and more efficient.

Building AI at Scale

Training today’s massive AI models is not something you can do on a home laptop. Companies like NVIDIA, Google, Microsoft, and Amazon run enormous data centres filled with racks of powerful servers, each packed with GPUs or TPUs.

These centres are sometimes compared to "AI factories" because they produce the computational horsepower needed to process billions or even trillions of data points.

This is why you often hear about huge investments in semiconductors and data centres. They are the backbone of AI progress. For example, NVIDIA’s H100 chips are now in such high demand that they are sometimes called the “gold of the AI age.” There are even specialty manufacturers in some countries that modify stock GPUs to turn them into AI powerhouses.

Why This Should Matter for You

Understanding the hardware side of AI gives you a clearer view of how this now-common technology is built from the ground up. While there is quite a bit of clever coding involved, a big chunk of its brilliance comes from the engineering of the hardware it runs on. For students of computer science, computer engineering, or IT, this means opportunities not just in software development, but also in hardware design, cloud infrastructure, and systems optimisation.

The future of AI will be shaped as much by engineers building chips and servers as by programmers writing algorithms. If you are interested in joining this field, learning about the hardware side of computing is a great place to start.

Enjoyed it so far? Stay tuned for Part 2 of this series, where we will explore the software side of AI: how it is trained, coded, and refined into the intelligent systems we see today.